This is post 1 of a series of 3 on upgrading servers to ForgeRock Directory Services 7.

DS 7 is a major release, much more cloud-friendly than ever before, and different in significant ways from earlier releases.

To upgrade successfully, make sure you understand the key differences beforehand. With these in mind, plan the upgrade, how you will test the upgraded version, and how you will recover if the upgrade process does not go as expected.

Fully Compatible Replication

Some things never change. The replication protocol remains fully compatible with earlier versions back to OpenDJ 3.

This means you can still upgrade servers while the directory service is online, but the process has changed.

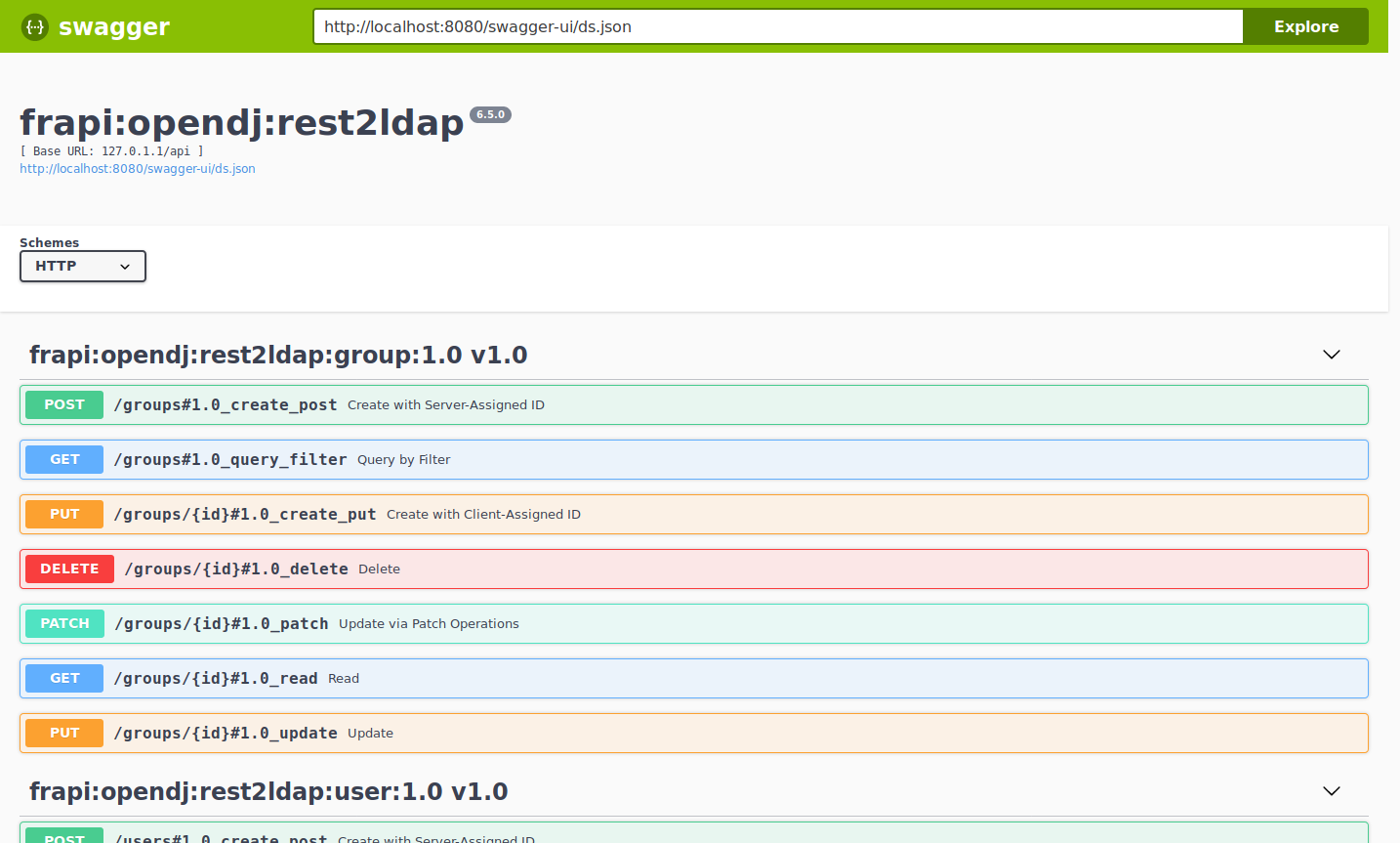

In 6.5 and earlier, you set up DS servers that did not yet replicate. Then, when enough of them were online, you configured replication.

In 7, you configure replication at setup time before you start the server. For servers that will have a changelog, you use the setup --replicationPort option for the replication server port. For all servers, you use the setup --bootstrapReplicationServer option to specify the replication servers that the server will contact when it starts up.
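As a rough sketch of how these two options fit together, the following Python helper assembles the replication-related part of a setup command line. Only --replicationPort and --bootstrapReplicationServer come from the text above. The helper, the host names, and port 8989 are hypothetical, repeating the bootstrap option once per server is an assumption, and a real setup command needs further options that are not shown here.

```python
from typing import List, Optional


def build_setup_args(
    bootstrap_servers: List[str],
    replication_port: Optional[int] = None,
) -> List[str]:
    """Assemble replication-related arguments for the DS 7 setup command.

    Illustrative only: other options that a real setup needs are omitted.
    """
    if not bootstrap_servers:
        raise ValueError("a replicated server needs at least one bootstrap replication server")

    args = ["setup"]
    # Only servers that will have a changelog get a replication server port.
    if replication_port is not None:
        args += ["--replicationPort", str(replication_port)]
    # Every server lists the replication servers to contact at startup.
    for server in bootstrap_servers:
        args += ["--bootstrapReplicationServer", server]
    return args


# Hypothetical host names and port, chosen for illustration.
bootstrap = ["rs1.example.com:8989", "rs2.example.com:8989"]
print(" ".join(build_setup_args(bootstrap, replication_port=8989)))  # server with a changelog
print(" ".join(build_setup_args(bootstrap)))  # server without a changelog
```

Keeping the bootstrap list in one place makes it easier to pass the same list to every server you set up.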

The bootstrap replication servers maintain information about the servers in the deployment. Each server learns about the others by reading that information. Replicas initiate replication when they contact the first bootstrap replication server.

As directory administrator, you no longer have to configure and initiate replication for a pure DS 7 deployment. DS 7 servers can start in any order as long as they initiate replication before taking updates from client applications.

Furthermore, you no longer have to actively purge servers from other servers’ configurations. The other servers “forget” a server that disappears for longer than the replication purge delay, and eventually purge its state.
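The "forgetting" behavior can be pictured with a small conceptual model. This is only a sketch of the idea, not how DS tracks replication state internally. The server names, timestamps, and the three-day purge delay are hypothetical values chosen for illustration.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def servers_to_forget(
    last_seen: Dict[str, datetime],
    now: datetime,
    purge_delay: timedelta,
) -> List[str]:
    """Return IDs of servers unseen for longer than the purge delay."""
    return sorted(
        server_id
        for server_id, seen in last_seen.items()
        if now - seen > purge_delay
    )


now = datetime(2021, 1, 10, 12, 0, 0)
purge_delay = timedelta(days=3)  # hypothetical value for illustration
last_seen = {
    "ds-1": now - timedelta(minutes=5),  # still active
    "ds-2": now - timedelta(days=4),     # gone for longer than the purge delay
}
print(servers_to_forget(last_seen, now, purge_delay))  # prints ['ds-2']
```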

These new capabilities bring you more deployment flexibility than ever before. As a trade-off, you must now think about configuring replication at setup time, and you must migrate scripts and procedures that used older commands to the new dsrepl command.

Unique String-Based Server IDs

By default, DS 7 servers use unique string-based server IDs.

In prior releases, servers had multiple numeric server IDs. Before you add a new DS 7 server to a deployment of older servers, you must assign it a “numeric” server ID.

Secure by Default

The setup --production-mode option is gone. All setup options and profiles are secure by default.

DS 7 servers require:

- Secure connections.

- Authentication for nearly all operations, denying most anonymous access by default.

- Additional access policies when you choose to grant access beyond what setup profiles define.

- Stronger passwords.

  New passwords must not match known compromised passwords from the default password dictionary. Also in 7, only secure password storage schemes are enabled by default, and reversible password storage schemes are deprecated.

- Permission to read log files.

Furthermore, DS 7 encrypts backup data by default. As a result of these changes, all deployments now require cryptographic keys.

Deployment Key Required

DS 7 deployments require cryptographic keys. Secure connections require asymmetric keys (public key certificates and associated private keys). Encryption requires symmetric (secret) keys that each replica shares.

To simplify key management and distribution, and especially to simplify disaster recovery, DS 7 uses a shared master key to protect secret keys. DS 7 stores the encrypted secret keys with the replicated and backed up data. This is new in DS 7, and replaces cn=admin data and the keys for that backend.

A deployment key is a random string generated by DS software. A deployment key password is a secret string at least 8 characters long that you choose. The two are a pair. You must have a deployment key’s password to use the key.

You generate a shared master key, and optionally, asymmetric key pairs, with the dskeymgr command using your deployment key and password. Even if you provide your own asymmetric keys for securing connections, you must use the deployment key and password to generate the shared master key.
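To see why a key and password pair is enough for every server to arrive at the same shared key, consider the following conceptual sketch. It is not the algorithm DS or dskeymgr uses. PBKDF2, the iteration count, the use of the deployment key as salt, and the sample values are all stand-ins chosen for illustration. Only the 8-character minimum comes from the text above.

```python
import hashlib

MIN_PASSWORD_LENGTH = 8  # from the text: at least 8 characters


def derive_master_key(deployment_key: str, password: str) -> bytes:
    """Derive a 32-byte key from a deployment key and its password.

    Stand-in for illustration; DS 7 does not necessarily work this way.
    """
    if len(password) < MIN_PASSWORD_LENGTH:
        raise ValueError("deployment key password must be at least 8 characters")
    return hashlib.pbkdf2_hmac(
        "sha256",
        password.encode("utf-8"),
        deployment_key.encode("utf-8"),  # deployment key used as the salt
        100_000,
    )


# Hypothetical values, not real DS output.
key_on_server_a = derive_master_key("example-deployment-key", "correct horse")
key_on_server_b = derive_master_key("example-deployment-key", "correct horse")
print(key_on_server_a == key_on_server_b)  # same pair, same key: True
print(key_on_server_a == derive_master_key("example-deployment-key", "wrong password"))  # False
```

The point of the sketch is that nothing needs to be copied between servers except the pair itself, which is why you must keep both the deployment key and its password safe.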

When you upgrade, or add a DS 7 server to a deployment of pre-7 servers, you must intervene to move from the old model to the new, and unlock all the capabilities of DS 7.

New Backup

As before, backups are not guaranteed to be compatible across major and minor server releases. If you must roll back from an unsuccessful upgrade, roll back the data as well as the software.

When you back up DS 7 data, the backup format is different. The new format always encrypts backup data. The new format lets you back up and restore data directly in cloud storage if you choose.

Backup operations are now incremental by design. The initial backup operation copies all the data, incrementing from nothing to the current state. All subsequent operations back up data that has changed.

Restoring a backup no longer involves restoring files from the full backup archive, and then restoring files from each incremental backup archive. You restore any backup as a single operation.
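The combination of incremental backups and single-step restores can be illustrated with a toy content-addressed store. This is a conceptual model only, not the DS 7 backup format, and it leaves out encryption. The class, file names, and backup IDs are hypothetical.

```python
import hashlib
from typing import Dict

Files = Dict[str, bytes]


def digest(content: bytes) -> str:
    """Identify file content by its SHA-256 digest."""
    return hashlib.sha256(content).hexdigest()


class BackupStore:
    """Toy store: each backup is a manifest pointing at shared content."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}               # digest -> content
        self.manifests: Dict[str, Dict[str, str]] = {}  # backup ID -> {file name: digest}

    def create(self, backup_id: str, files: Files) -> int:
        """Back up files, copying only content that is not already stored.

        Returns the number of blobs copied by this operation.
        """
        copied = 0
        manifest: Dict[str, str] = {}
        for name, content in files.items():
            d = digest(content)
            if d not in self.blobs:
                self.blobs[d] = content
                copied += 1
            manifest[name] = d
        self.manifests[backup_id] = manifest
        return copied

    def restore(self, backup_id: str) -> Files:
        """Restore any backup in one step, with no chain of archives to replay."""
        return {name: self.blobs[d] for name, d in self.manifests[backup_id].items()}


store = BackupStore()
data = {"a.db": b"alpha", "b.db": b"bravo"}
print(store.create("backup-1", data))     # first backup copies everything: 2
data["b.db"] = b"bravo v2"
print(store.create("backup-2", data))     # later backups copy only changes: 1
print(store.restore("backup-2") == data)  # True
```

Because every manifest is complete on its own, restoring the second backup does not require restoring the first one beforehand.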

The previous backup and restore tools are gone. In their place is a single dsbackup command for managing backup and restore operations, for verifying backup archives, and for purging outdated backup files.

Upgrade Strategies

When you upgrade to a new DS version, you choose between two strategies: upgrade in place, by unpacking the new software over the old and then running the upgrade command, or upgrade by adding new servers and retiring old ones.

Keep Reading

Now that you understand the key differences in DS 7, try a demo or two:

This is post 2 of a series of 3 on upgrading servers to
